No State, Just Gossip! Making tunnelto.dev distributed

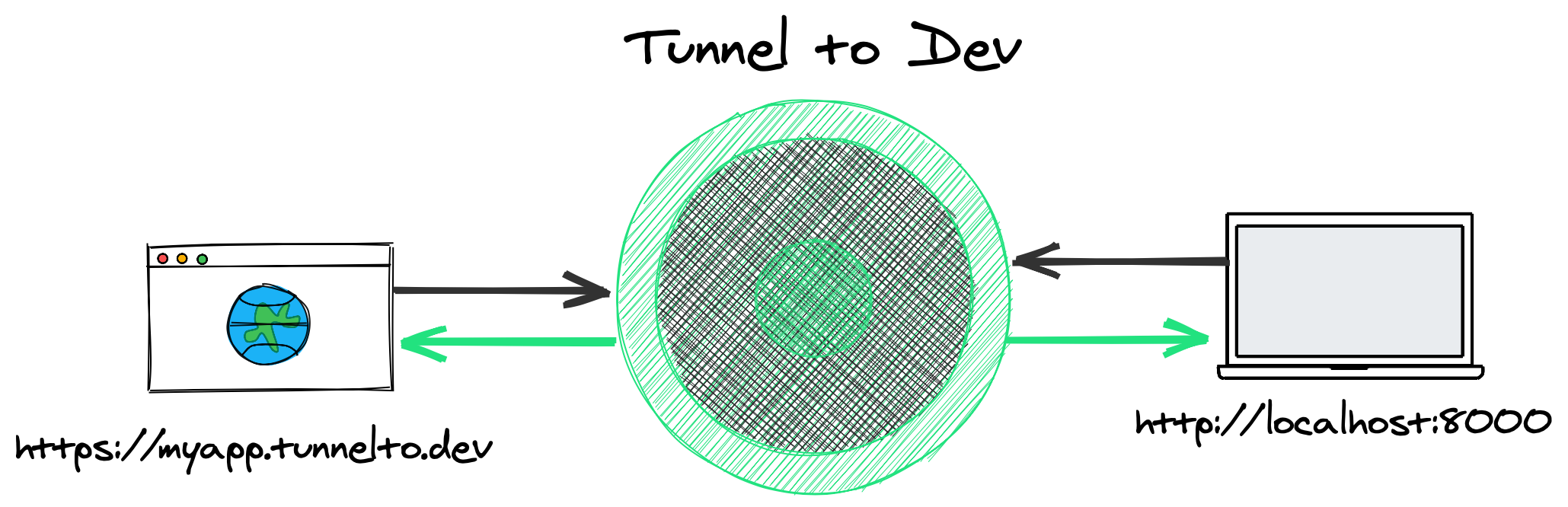

Often the best way to debug a problem in a web app is to deploy the change and test it live. Deploying is slow and sometimes not possible, so why not just test against your server running on localhost? Tunnelto.dev is a simple developer utility that lets you expose your local web server to the internet with a public URL. Use it to test and build your webhook integration for Slack or Stripe, or use the public URL in an iPhone app so it works in demos on any network.

Tunnelto.dev is certainly not the first of its kind; it's an alternative to tools like ngrok. However, unlike the others, both the tunnelto.dev CLI and server are 100% open source on GitHub, so there's no vendor lock-in. (Also, it's built in Rust.) It's perfect for use in the enterprise when you can't breach the corporate network. If you don't want to host it yourself, you can use our hosted version.

Tunnelto started off as an open source Rust project — just code. But then I met Fly and I fell in love. Fly.io is a platform-as-a-service for deploying code, literally any kind of code, and it gets dynamically replicated all over the world. Basically you can quickly spin up Docker containers in any number of regions either purposefully or based on live user demand. They make it insanely easy to create production grade services with a couple of lines in a TOML file.

I had never intended to even run a hosted version of tunnelto.dev — but Fly made it so easy that I just couldn't resist.

Digging a tunnel

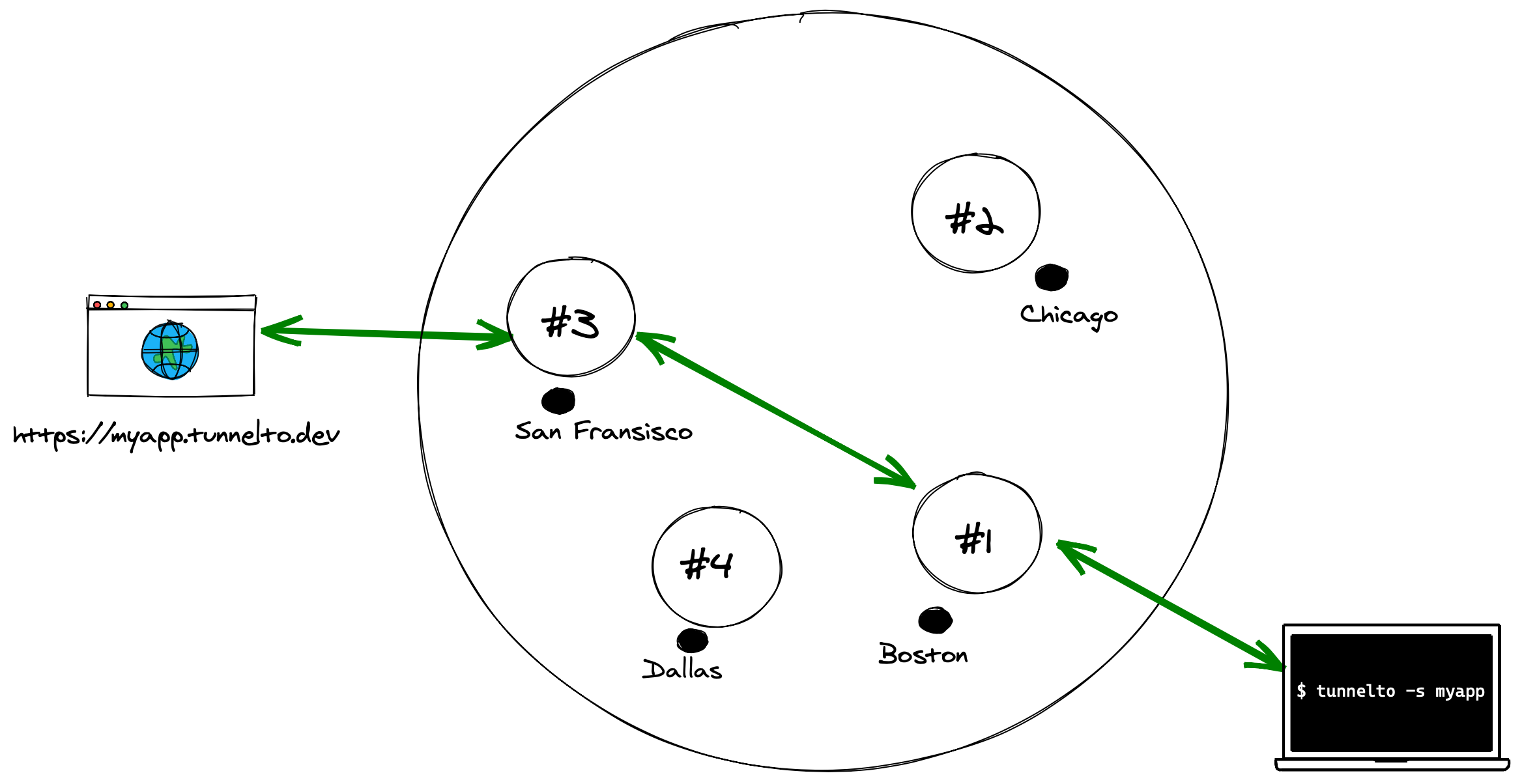

Running tunnelto --subdomain myapp --port 8000 opens a persistent connection to our servers running on https://fly.io using WebSockets. The tunnelto CLI proxies HTTP traffic to a locally running server on port 8000. When you go to https://myapp.tunnelto.dev you'll see your locally running server on the public web!

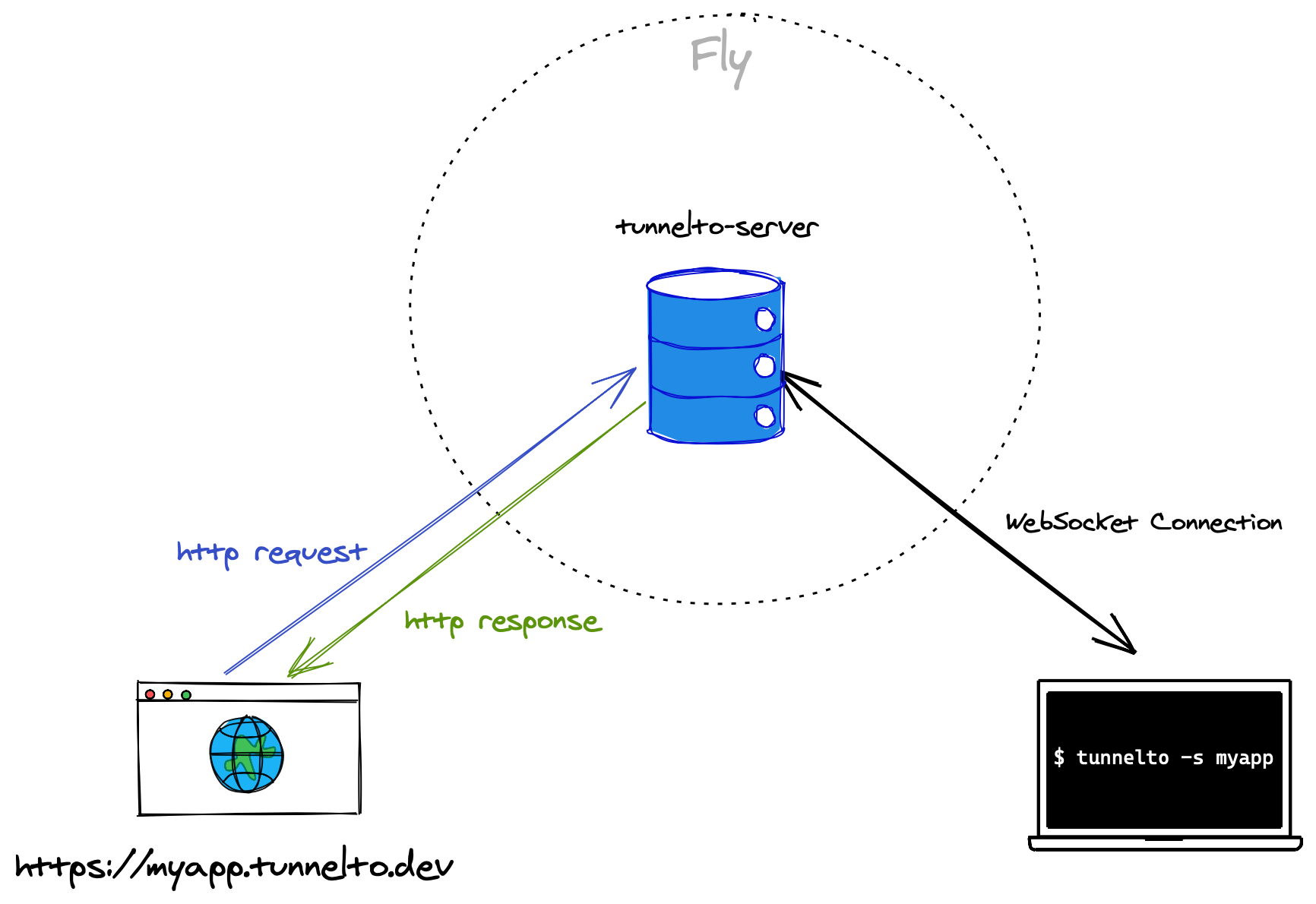

When anyone fires off a request to myapp.tunnelto.dev, the tunnelto server peeks at the host and identifies the corresponding tunnelto CLI WebSocket connection that wants to serve the myapp subdomain.
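To make the "peek at the host" step concrete, here is a minimal sketch, not the actual tunnelto code. The names `subdomain_of`, `route`, and `ClientId` are made up for illustration, and the map of connected clients is assumed to be a plain in-memory `HashMap`.

```rust
use std::collections::HashMap;

/// Hypothetical identifier for a connected CLI's WebSocket session.
type ClientId = u64;

/// Extract the subdomain label from a Host header value such as
/// "myapp.tunnelto.dev" or "myapp.tunnelto.dev:443".
fn subdomain_of<'a>(host: &'a str, base_domain: &str) -> Option<&'a str> {
    // Drop an optional ":port" suffix.
    let host = host.split(':').next()?;
    // Strip the base domain, then the dot separating it from the label.
    let label = host.strip_suffix(base_domain)?.strip_suffix('.')?;
    // Reject empty labels and nested ones such as "a.b".
    if label.is_empty() || label.contains('.') {
        None
    } else {
        Some(label)
    }
}

/// Find the client that registered the subdomain named in the Host header.
fn route(host: &str, clients: &HashMap<String, ClientId>) -> Option<ClientId> {
    let sub = subdomain_of(host, "tunnelto.dev")?;
    clients.get(sub).copied()
}
```

A request for the bare domain, or for a subdomain nobody registered, yields `None`, which a server would presumably turn into an error page.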

Next, the entire HTTP stream is wrapped inside of the WebSocket connection, and sent down to the tunnelto CLI. The tunnelto CLI forwards that wrapped HTTP stream to http://localhost:8000.

Similarly, when your localhost server responds, the tunnelto CLI wraps the response stream inside the WebSocket connection and sends it back up to the tunnelto server. Finally, the tunnelto server returns this HTTP stream as the response to the original request.
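One way to picture the wrapping is as a small frame around each chunk of HTTP bytes. The sketch below is purely illustrative: the `Frame` type, the one-byte tag, and the `stream_id` field are assumptions of mine (an id is one plausible way to tell concurrent requests apart on a single WebSocket), not the wire format tunnelto actually uses.

```rust
/// Hypothetical frame carried as one binary WebSocket message.
#[derive(Debug, PartialEq)]
enum Frame {
    /// A chunk of raw HTTP bytes belonging to one proxied request.
    Data { stream_id: u32, bytes: Vec<u8> },
    /// The HTTP stream with this id has finished.
    End { stream_id: u32 },
}

impl Frame {
    /// Layout: 1 tag byte, 4-byte big-endian stream id, then the payload.
    fn encode(&self) -> Vec<u8> {
        match self {
            Frame::Data { stream_id, bytes } => {
                let mut out = vec![0u8];
                out.extend_from_slice(&stream_id.to_be_bytes());
                out.extend_from_slice(bytes);
                out
            }
            Frame::End { stream_id } => {
                let mut out = vec![1u8];
                out.extend_from_slice(&stream_id.to_be_bytes());
                out
            }
        }
    }

    /// Parse a message; returns None for short or unknown frames.
    fn decode(msg: &[u8]) -> Option<Frame> {
        if msg.len() < 5 {
            return None;
        }
        let stream_id = u32::from_be_bytes([msg[1], msg[2], msg[3], msg[4]]);
        match msg[0] {
            0 => Some(Frame::Data { stream_id, bytes: msg[5..].to_vec() }),
            1 if msg.len() == 5 => Some(Frame::End { stream_id }),
            _ => None,
        }
    }
}
```

Both ends would run the same encode and decode logic: the server frames the incoming request bytes, the CLI unwraps them for localhost, and the response travels back the same way.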

In essence, there are two proxy hops: the first happens at the tunnelto server, which proxies HTTP streams over WebSockets (effectively HTTP over HTTP), and the second happens on your local machine between the tunnelto CLI and http://localhost:8000.

Anywhat?

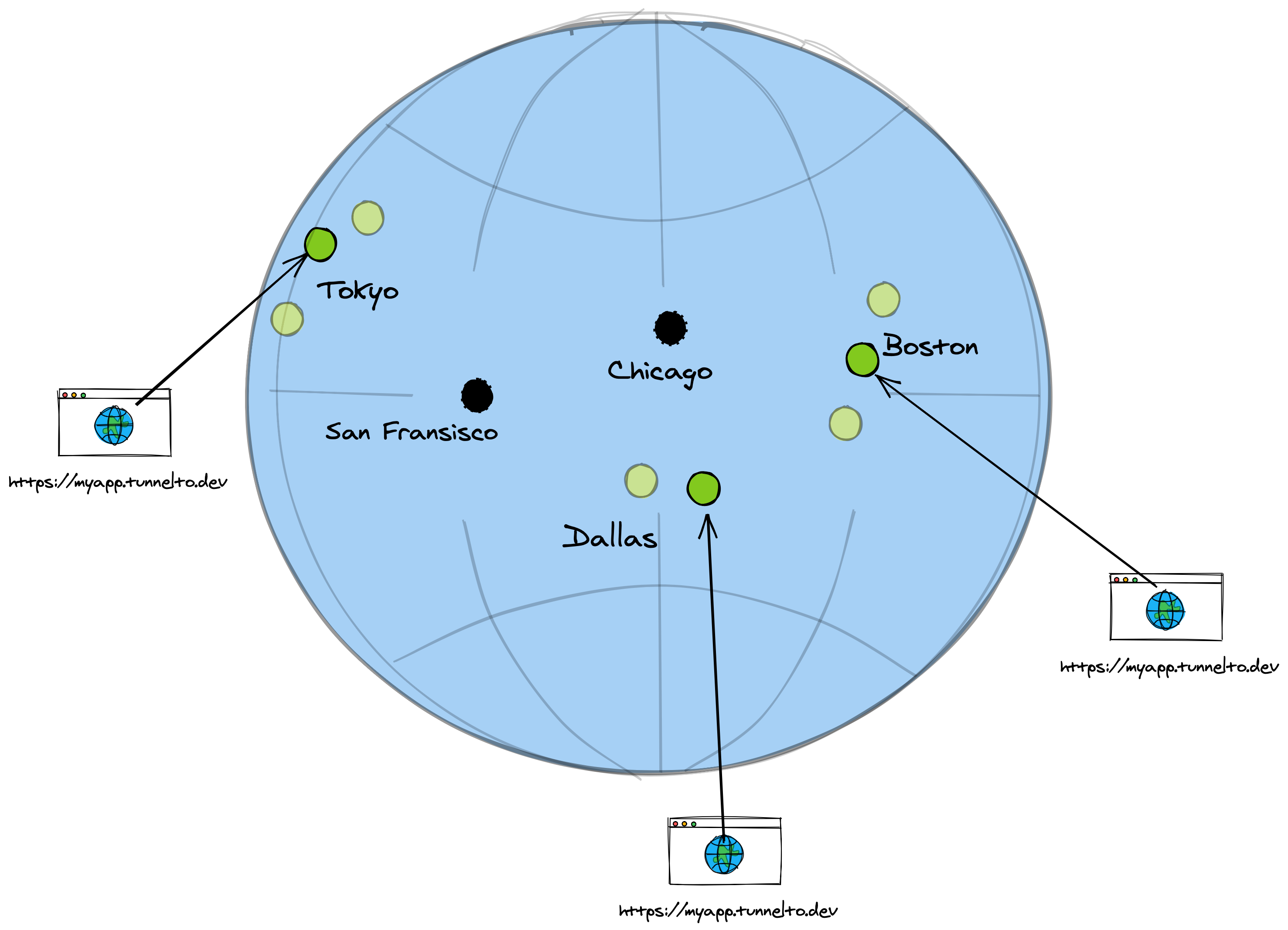

So what happens when we turn on fly autoscale and let Fly magically spin up many instances of our tunnelto server in many different geographies based on demand?

Fly gives our tunnelto server a fixed IP address from an Anycast block, which enables the "magical internet routing engine" (BGP) to pick the shortest route to a particular instance of our running tunnelto server. That is to say, we have many instances of tunnelto-server running in various datacenters, but our tunnelto server has just one anycast IP address, and all clients are dynamically routed to the "best" instance.

Who has that Tunnel?

Say you connect to myapp.tunnelto.dev and Fly routes to instance-3 in Dallas but the WebSocket connection for the myapp client is actually running on instance-7 in Boston. How do these neighbors find out about each other?

Idea: Global State

Each instance needs to know where a target tunneling client is connected, that is, which tunnelto server instance is holding its WebSocket connection. So let's do something boring: add a database. Well, this is a distributed system, so let's use a distributed KV (Key-Value) Store (like Redis).

Our key value store can be simple: map subdomain -> IP. When an instance gets a request on a particular subdomain, it looks up the IP address in our KV store, and then forward proxies the connection to that instance IP.

But how do we keep this KV store in sync? Every time a new tunneling client connects (on a WebSocket connection) we must update our KV store. This might seem fairly straightforward, but instances can go down at any time. In fact, a WebSocket connection can restart for a number of reasons and reconnect to a different instance entirely. This means we need to write to this KV store frequently. We also can't rely on perfect cleanup (removing subdomain keys), as an instance can go down at a moment's notice.
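To illustrate the bookkeeping this idea implies, here is a hedged sketch of the subdomain -> IP map with an expiry on every entry, one common way to cope with cleanup that never happens. The `Registry` type and its TTL are my own illustration using an in-memory `HashMap` as a stand-in for the KV store; tunnelto never shipped this.

```rust
use std::collections::HashMap;
use std::net::IpAddr;
use std::time::{Duration, Instant};

/// Hypothetical in-memory stand-in for the shared KV store.
struct Registry {
    ttl: Duration,
    entries: HashMap<String, (IpAddr, Instant)>,
}

impl Registry {
    fn new(ttl: Duration) -> Self {
        Registry { ttl, entries: HashMap::new() }
    }

    /// Record (or refresh) which instance holds the WebSocket for `subdomain`.
    fn register(&mut self, subdomain: &str, instance: IpAddr, now: Instant) {
        self.entries
            .insert(subdomain.to_string(), (instance, now + self.ttl));
    }

    /// Look up the owning instance, ignoring entries that were not refreshed
    /// within the TTL (for example because their instance crashed).
    fn lookup(&self, subdomain: &str, now: Instant) -> Option<IpAddr> {
        match self.entries.get(subdomain) {
            Some((ip, expires_at)) if now < *expires_at => Some(*ip),
            _ => None,
        }
    }
}
```

Even with expiry, every instance has to keep refreshing its entries, and a lookup can still return an address that went stale a few seconds ago. That is the extra moving part the rest of this post tries to avoid.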

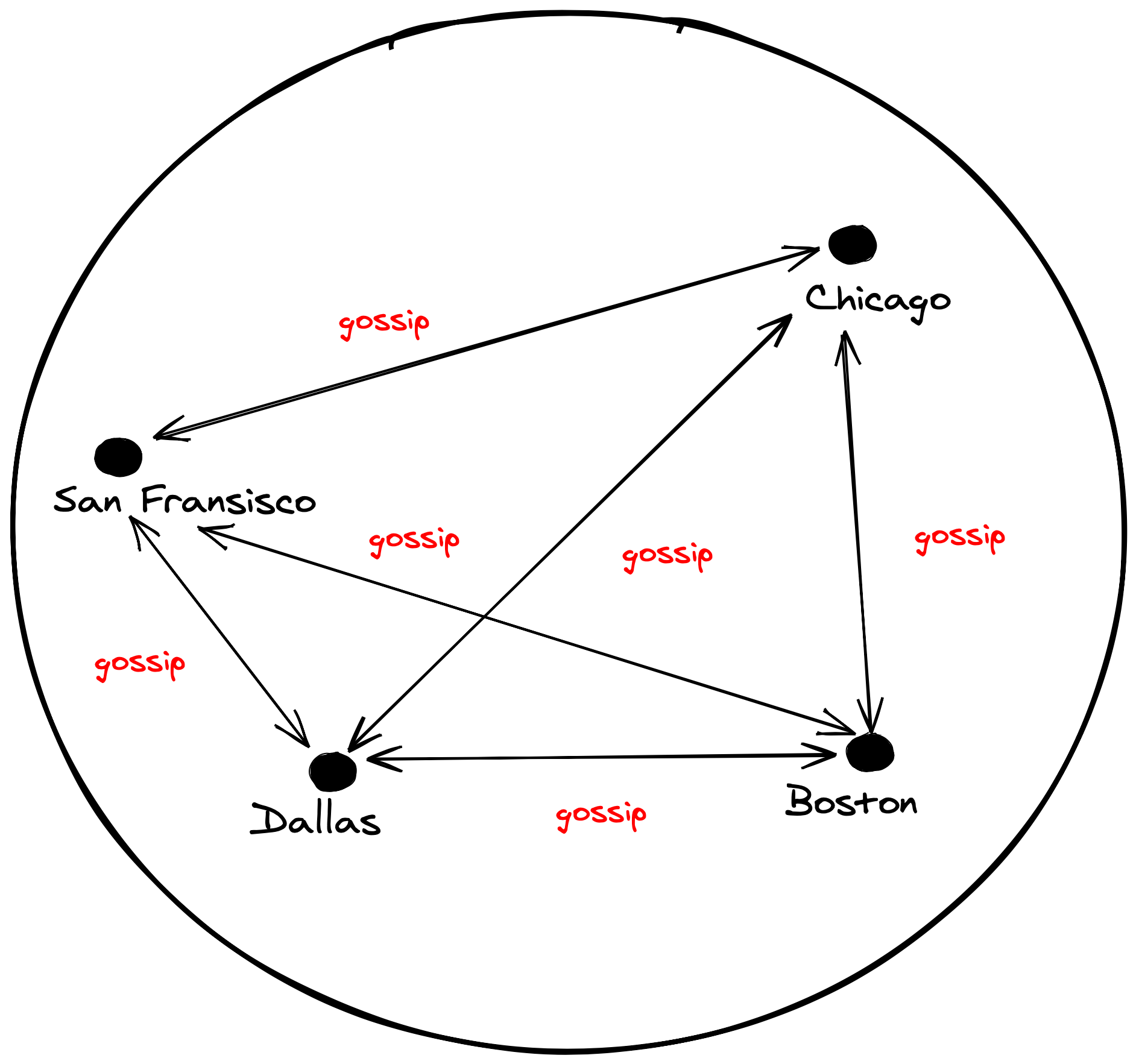

Distributed databases, like CockroachDB or Redis, use gossip protocols to keep nodes in sync. By adding Redis we're adding a whole new system — a distributed system — that needs to stay in sync or else we'll get stale answers and forward the request to the wrong place.

But we don't need to rely on an external system to do this. With Fly, this connectivity is built securely into the platform.

Gossip with your neighbors

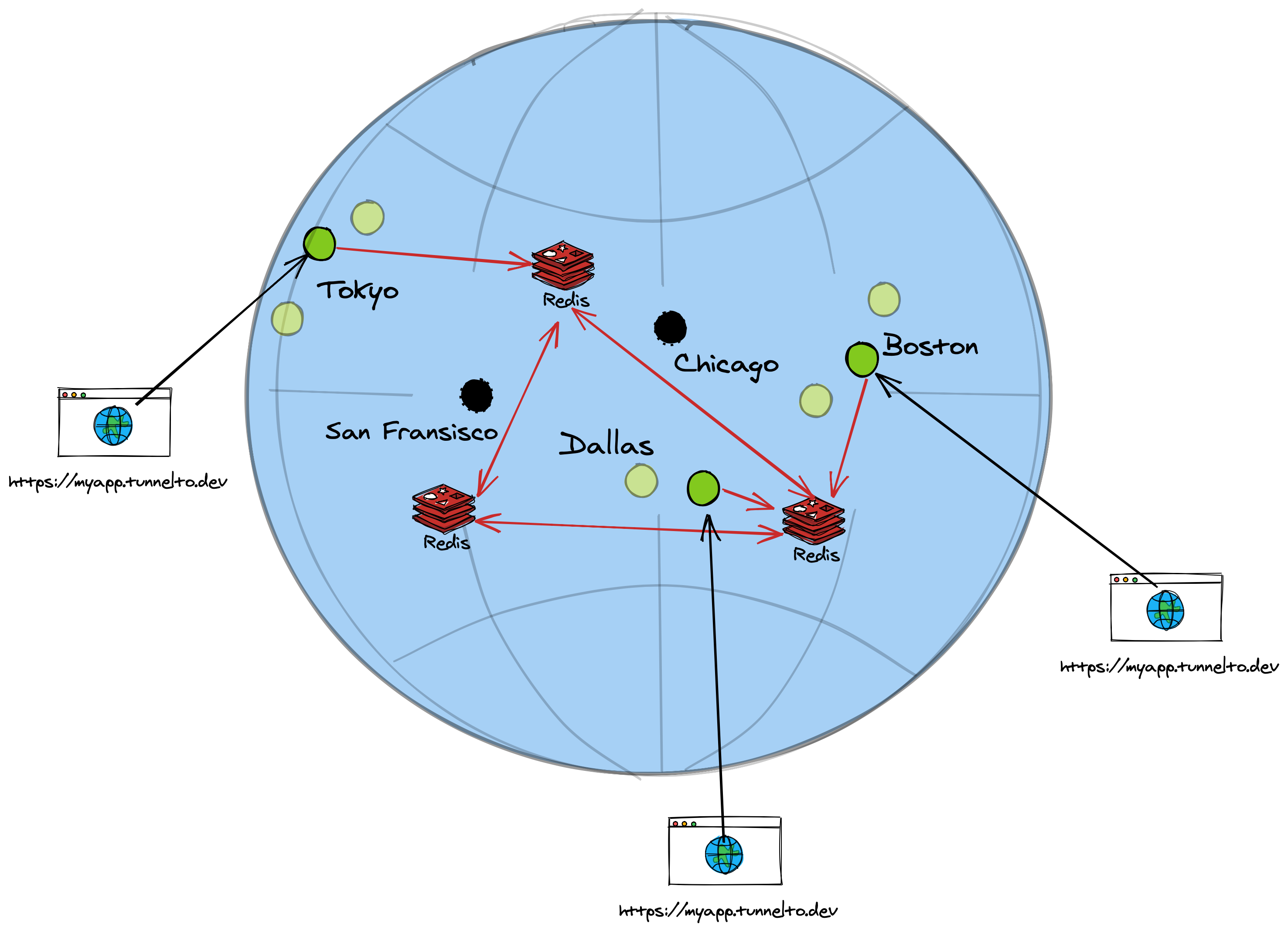

Let's cut out the middle man and just gossip! Instead of deploying another system to keep our data in sync, we can dynamically get the answers we need by going to the actual source of truth. The time it takes to get our answer equals the time it takes to send a request to the instance hosting the particular subdomain in question. This is a good answer because we'll need to connect to that instance to proxy traffic to it regardless.

Fly's Private Networking to the rescue!

A couple of months ago fly.io released a slick new feature called 6PN (IPv6 Private Networking). In short, each instance of your server has access to every other instance via a WireGuard mesh network. Discoverability is easy: just query a special DNS address and you'll get the IPs for each instance of your app.

Basically, you get secure communication and discoverability for free!
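As a rough sketch of the discovery step, the snippet below resolves a private DNS name with the standard library and drops the instance's own address. I'm assuming the name has the form `<app>.internal` and that the instance knows its own 6PN address; check Fly's private networking docs for the exact names. The helper functions are hypothetical.

```rust
use std::io;
use std::net::{IpAddr, ToSocketAddrs};

/// Build the private DNS name for an app.
/// Assumption: instances are listed under `<app>.internal`.
fn internal_name(app: &str) -> String {
    format!("{}.internal", app)
}

/// Resolve every instance address behind the private DNS name.
/// This only works from inside the private network.
fn resolve_instances(app: &str, port: u16) -> io::Result<Vec<IpAddr>> {
    let addrs = (internal_name(app).as_str(), port).to_socket_addrs()?;
    Ok(addrs.map(|addr| addr.ip()).collect())
}

/// Drop our own address so an instance does not query itself.
fn peers(all: &[IpAddr], me: IpAddr) -> Vec<IpAddr> {
    all.iter().copied().filter(|ip| *ip != me).collect()
}
```

Because the list is looked up at request time, new instances show up and dead ones disappear without any registration step on our side.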

Ask and you shall receive

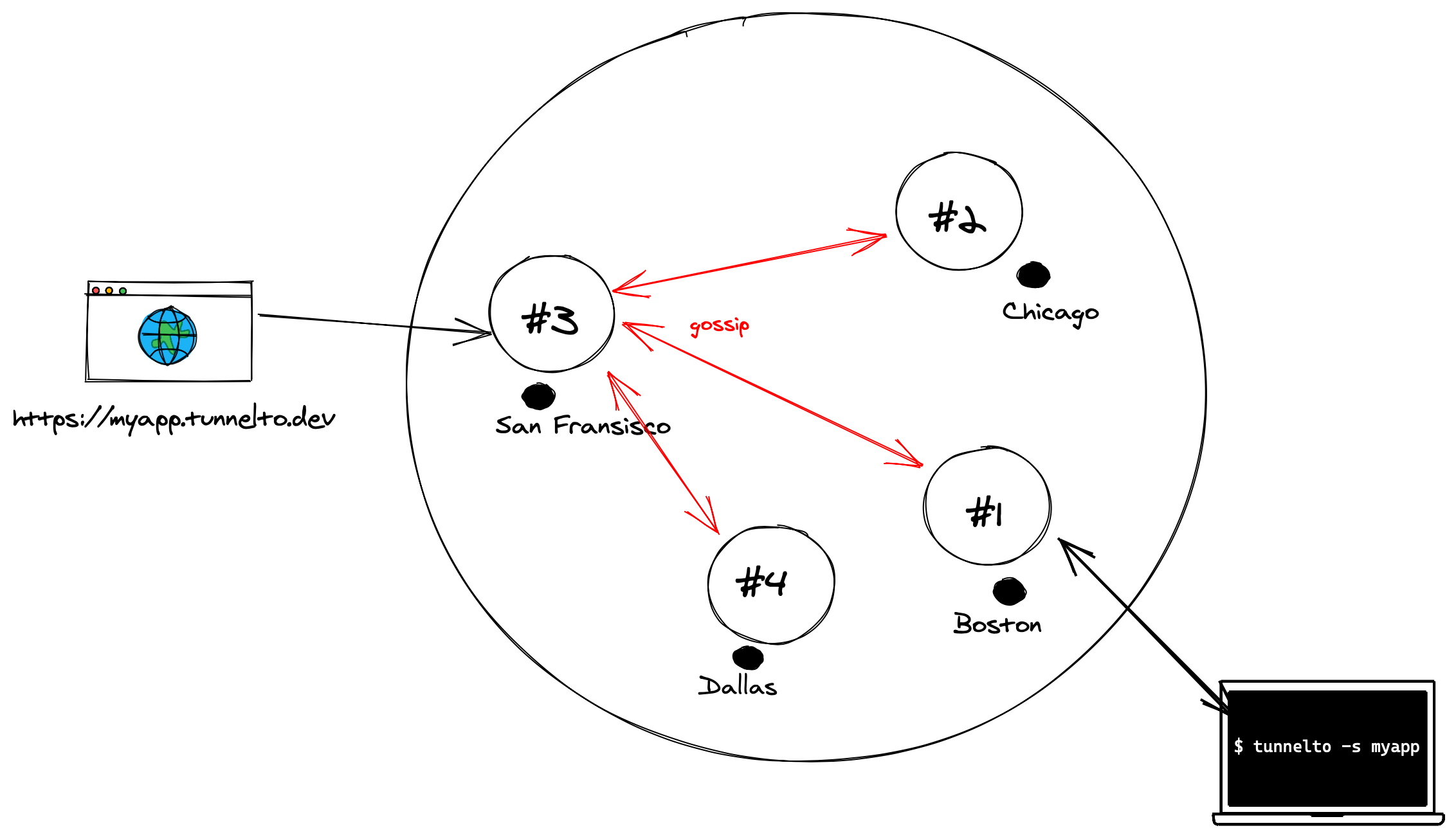

Instead of a global state, we can simply gossip. An instance sends a query to every other instance asking if it hosts the subdomain in question.

- Accept an arbitrary HTTP connection on any subdomain, like myapp.tunnelto.dev

- Query the 6PN DNS server for a list of instance IPs

- Send, in parallel, to each instance a simple HTTP request containing the subdomain and select the host that answers "yes" (thank you tokio.rs!)

- Proxy the HTTP traffic to the selected instance

- The selected instance proxies this HTTP traffic through the WebSocket connection onto the client
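The "ask everyone in parallel" step above can be sketched as follows. To keep the example dependency-free it swaps tokio tasks for scoped OS threads, and the `ask` closure is a hypothetical stand-in for the small HTTP request to each instance, so treat it as an illustration of the shape rather than the real implementation.

```rust
use std::net::IpAddr;
use std::sync::mpsc;
use std::thread;

/// Ask every peer in parallel whether it holds the tunnel for `subdomain`
/// and return the first one that answers "yes".
fn find_host<F>(peers: &[IpAddr], subdomain: &str, ask: F) -> Option<IpAddr>
where
    F: Fn(IpAddr, &str) -> bool + Sync,
{
    thread::scope(|scope| {
        let (tx, rx) = mpsc::channel();
        for &peer in peers {
            let tx = tx.clone();
            let ask = &ask;
            scope.spawn(move || {
                if ask(peer, subdomain) {
                    // The receiver may already be gone; that is fine.
                    let _ = tx.send(peer);
                }
            });
        }
        // Drop the original sender so `recv` fails once every thread is done.
        drop(tx);
        // First "yes" wins; an error means nobody claimed the subdomain.
        rx.recv().ok()
    })
}
```

Note that `thread::scope` still waits for every thread to finish before returning, so a real version would want a timeout on each query. An async runtime makes it easier to drop the slow stragglers once one instance has answered.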

All in, it took about 150 lines of Rust to make tunnelto.dev distributed! In fact, the first version of tunnelto was not distributed; it was designed to run only on a single VM. I never anticipated I would make it fully distributed, until Fly.io forced my hand by making it so damn easy.

Advanced Gossiping

Tunnelto's use of "gossip" is rather simple. It uses a one-way broadcast mechanism and simple "yes" or "no" responses to get the data each instance needs. You can imagine, however, we might be able to optimize things further by having instances constantly communicate with each other in a more complex protocol. We opted to keep it simple, but for more "fun" gossip techniques, take a look at SWIM (Scalable Weakly-consistent Infection-style Process Group Membership Protocol). There's even a Rust library for it!

Thanks to Thomas Ptacek and Kurt Mackey for reading drafts of this.

Check out fly.io — you should be able to deploy an app on the platform in just a couple of minutes!

Want to try tunnelto.dev? Use code FLYROCKS to get one month free.